As AI capabilities become essential to competitive business operations, a critical infrastructure question emerges: should you run AI models on your own servers or consume them through cloud APIs? In the debate between Self-Hosted AI and API, this decision significantly affects costs, performance, security, and operational complexity. Understanding the trade-offs helps CTOs make informed choices aligned with business requirements.

This article continues our series on AI cost optimization. For foundational context, see our guides on reducing AI costs and Laravel’s approach to cost-efficient AI integration.

Understanding the Two Approaches

Cloud APIs from providers such as OpenAI, Anthropic, and Google provide immediate access to powerful AI models. You pay per use, receive automatic updates, and avoid the hassle of infrastructure management. Models like GPT-5, Claude Opus 4.6, and Gemini 3 Pro represent billions of dollars in training investment that you can access for pennies per query.

Self-hosted models run on your own infrastructure. Open-source models like Llama 4, Mistral Large 3, and DeepSeek can be deployed on your servers or private cloud. You control the hardware, data flow, and model versions. Costs shift from per-query pricing to infrastructure investment.

Neither approach is universally superior. The right choice depends on your specific requirements, usage patterns, and organizational constraints.

When Cloud APIs Make Sense

For most businesses, cloud APIs remain the practical choice. Several factors favor API consumption over self-hosting.

Variable or Unpredictable Usage

APIs excel when usage fluctuates significantly. A customer service chatbot might handle 100 queries one day and 10,000 the next. Pay-per-use pricing scales naturally with demand. Self-hosted infrastructure must be provisioned for peak capacity, leaving expensive GPU resources idle during periods of low demand.

Access to Frontier Models

The absolute frontier remains proprietary. GPT-5.2, Claude Opus 4.6, and Gemini 3 Pro still lead on the most demanding reasoning and analysis tasks. However, the gap has narrowed dramatically—open-source models like Llama 4 Maverick now match GPT-4-class performance on many benchmarks. If your use case demands the very latest capabilities, APIs remain the fastest path to access them.

Limited AI Engineering Expertise

Self-hosting requires specialized skills: GPU optimization, model quantization, inference optimization, and infrastructure management. Teams without this expertise face steep learning curves and operational risks. APIs abstract away this complexity entirely.

Rapid Experimentation

When exploring AI applications, APIs enable quick iteration. Testing different models, prompting strategies, and use cases requires minimal setup. Self-hosting demands upfront infrastructure investment before experimentation begins.

When Self-Hosting Becomes Compelling

Despite API advantages, specific scenarios make self-hosting the better choice.

High-Volume, Predictable Workloads

The economics flip at scale. Consider a document processing system handling 1 million requests monthly. At API pricing of $3 per million input tokens, costs accumulate quickly. A dedicated GPU server costing $2,000-$5,000 per month can handle the same volume at a fraction of the per-query cost.

The breakeven point varies by model and usage pattern, but typically falls between 500,000 and 2 million monthly requests. Beyond this threshold, self-hosting delivers significant cost advantages that compound over time.

Data Sensitivity and Compliance

Some data cannot leave your infrastructure. Healthcare records, financial information, and classified materials may require processing within controlled environments. Self-hosting ensures data never traverses external networks or resides on third-party servers.

Regulatory requirements such as GDPR, HIPAA, or industry-specific standards may mandate data-residency controls that cloud APIs cannot guarantee. Self-hosting provides the power necessary for compliance.

Latency-Critical Applications

API calls introduce network latency. A round trip to cloud infrastructure adds 50-200 milliseconds regardless of model speed. For real-time applications like voice assistants or interactive gaming, this latency degrades user experience.

Self-hosted models running on local infrastructure eliminate network overhead. Sub-10-millisecond response times become achievable for appropriate model sizes.

Customization Requirements

Self-hosting enables model customization impossible with APIs. Fine-tuning on proprietary data, adjusting the model architecture, or optimizing for specific hardware requires direct access to the model. API providers offer limited customization options.

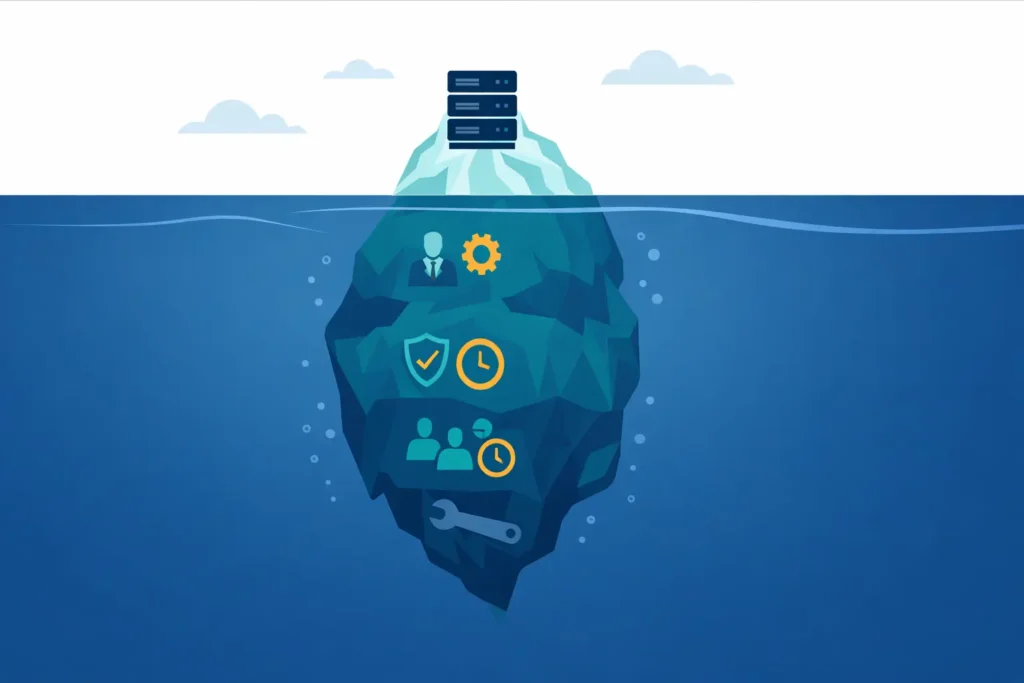

The Hidden Costs of Self-Hosting

Infrastructure costs represent only part of the self-hosting equation. Several hidden expenses deserve consideration.

GPU Hardware Investment

Running capable models requires significant GPU resources. A single NVIDIA H100 GPU costs $25,000-40,000, with the newer H200 at $30,000-40,000. However, Mixture-of-Experts (MoE) architectures have changed the equation: Llama 4 Scout (109B total parameters, 17B active) fits on a single H100 with quantization, making capable self-hosted AI more accessible than previously required dense models. Hardware refreshes every 2-3 years add ongoing capital requirements.

Operational Expertise

Self-hosted AI infrastructure demands specialized operations. Model updates, security patches, performance optimization, and troubleshooting require dedicated expertise. Hiring or training this capability represents a significant investment.

Reliability Engineering

Production AI services require high availability. Redundancy, failover systems, monitoring, and incident response add complexity and cost. API providers handle this transparently; self-hosting places the burden on your team.

Opportunity Cost

Engineering resources devoted to AI infrastructure cannot be used for core product development. For most businesses, infrastructure is not a competitive differentiator. Delegating it to specialized providers frees talent for higher-value work.

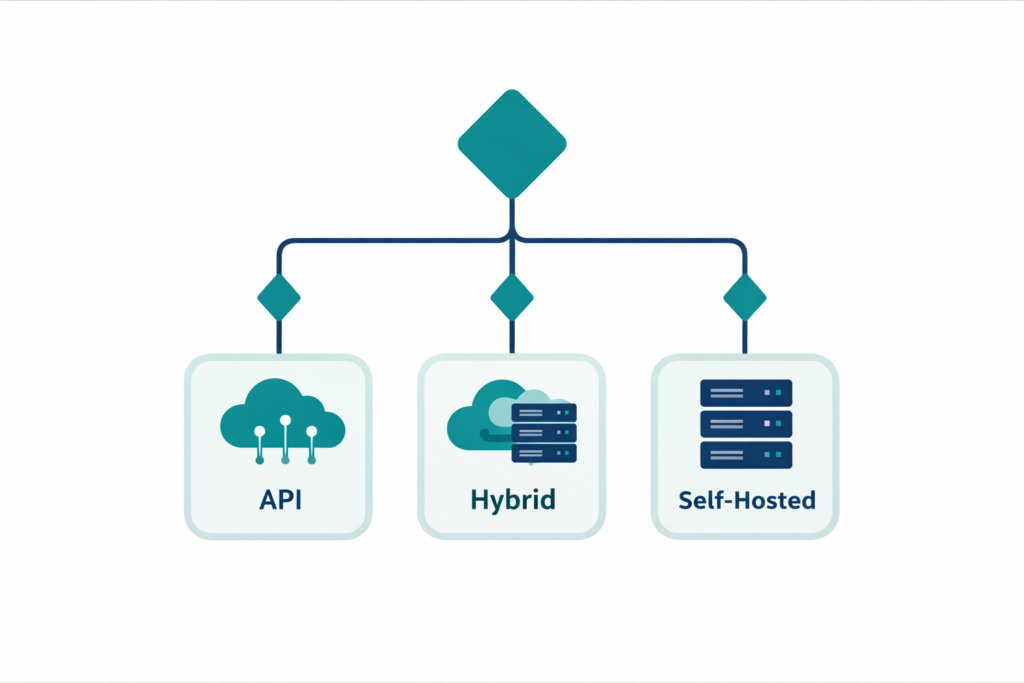

Hybrid Approaches: The Practical Middle Ground

Many organizations adopt hybrid strategies that leverage the benefits of both approaches.

Tiered Model Routing

Route simple tasks to self-hosted models while sending complex queries to cloud APIs. A self-hosted Llama 4 Scout or DeepSeek model handles routine classification. Complex reasoning escalates to Claude Opus 4.6 or GPT-5. This approach optimizes cost while maintaining access to capabilities.

Development vs Production

Use self-hosted models for development and testing to avoid API costs during iteration. Deploy to cloud APIs for production where reliability matters. This preserves experimentation velocity while ensuring production stability.

Fallback Architecture

Maintain self-hosted capability as a fallback when APIs experience outages or rate limiting. Primary traffic flows through cloud APIs; self-hosted infrastructure activates during disruptions. This provides resilience without a full self-hosting investment.

Decision Framework for CTOs

Evaluate your situation against these criteria to guide your decision.

Start with APIs If:

- Monthly AI requests are under 500,000

- Usage patterns are unpredictable or seasonal

- You need frontier model capabilities (GPT-5, Claude Opus 4.6, Gemini 3 Pro)

- Your team lacks GPU infrastructure experience

- Time-to-market is critical

- Data sensitivity does not prohibit external processing

Consider Self-Hosting If:

- Monthly requests exceed 1-2 million with predictable patterns

- Data must remain within your infrastructure for compliance

- Latency under 50ms is required

- You need extensive model customization

- Your team has GPU and ML operations expertise

- Long-term cost optimization is prioritized over initial simplicity

Adopt Hybrid When:

- You need both frontier capabilities and cost efficiency

- Different use cases have different requirements

- Resilience and redundancy are priorities

- You want to build self-hosting capability gradually

Open-Source Models Worth Considering

If self-hosting makes sense for your situation, several open-source models offer compelling capabilities.

Llama 4 Scout (Meta): A Mixture-of-Experts model with 109B total parameters (17B active). Fits on a single H100 GPU with quantization. Offers an industry-leading 10 million token context window—permissive licensing for commercial use.

Llama 4 Maverick (Meta): The larger sibling with 400B total parameters (17B active) and 128 experts. Matches GPT-4-class performance on many benchmarks. Requires a multi-GPU H100 setup. Supports 1 million token context.

DeepSeek V3.2 / R1: Ultra-low-cost via API ($0.14/$0.28 per million tokens for V3.2) and available for self-hosting. R1 offers strong reasoning capabilities, making it a compelling option for cost-sensitive deployments.

Mistral Large 3: A 675B MoE model with 256K context window. Excellent for European data residency requirements. Priced competitively at $0.50/$1.50 per million tokens via API.

How Pegotec Approaches This Decision

Our AI integration projects begin with usage analysis and requirement assessment. We help clients understand their actual needs rather than making assumptions about infrastructure requirements.

For most clients, we recommend starting with cloud APIs and the optimization techniques covered in our earlier articles. Caching, prompt optimization, and model routing typically reduce API costs by 40-70%, making self-hosting unnecessary for all but the highest-volume applications.

When self-hosting makes sense, we design hybrid architectures that optimize cost while maintaining access to frontier capabilities. Our Laravel expertise enables clean integration patterns that make infrastructure decisions transparent to application code.

Conclusion

The decision between self-hosting and using an API should be carefully analyzed rather than assumed. Cloud APIs suit most businesses thanks to their simplicity, access to cutting-edge models, and flexible pricing. Self-hosting becomes compelling at high volumes, with sensitive data, or when latency matters critically.

Most organizations benefit from hybrid approaches that leverage the advantages of both models. Start with APIs, optimize aggressively, and consider self-hosting only when usage patterns and requirements clearly justify the investment.

Evaluating AI infrastructure options for your organization? Contact Pegotec to discuss how our experience across both approaches can help you make the right choice for your specific situation.

FAQ Section About Self-Hosted AI vs API

The breakeven point typically falls between 500,000 and 2 million monthly requests, depending on model size and infrastructure costs. Below this threshold, API pay-per-use pricing usually wins. Above it, dedicated infrastructure delivers significant savings.

Open-source models have dramatically closed the gap. Llama 4 Maverick matches GPT-4-class performance on many benchmarks, and DeepSeek R1 delivers strong reasoning capabilities. However, the absolute frontier—GPT-5.2, Claude Opus 4.6, Gemini 3 Pro—still leads on the most demanding tasks. Hybrid approaches let you access frontier capabilities when needed.

Requirements depend on model architecture. Modern MoE models like Llama 4 Scout (109B total, 17B active parameters) fit on a single NVIDIA H100 GPU with quantization. Larger models like Llama 4 Maverick (400B total) require a multi-GPU H100 setup. Cloud GPU instances (H100 from ~$2.10/hour, H200 from ~$2.50/hour) offer flexibility without hardware purchase.

Yes, with proper architecture. Abstract AI calls behind service interfaces that support multiple backends. This enables gradual migration, A/B testing between approaches, and fallback capabilities without application changes.

Need help with your project?

Book a free 30-minute consultation with our developers. No strings attached.